By Jennifer Bresnick, HealthIT Analytics | September 17, 2018

Artificial intelligence is a hot topic in healthcare, sparking ongoing debate about the ethical, clinical, and financial pros and cons of relying on algorithms for patient care.

In what seems like the blink of an eye, mentions of artificial intelligence have become ubiquitous in the healthcare industry.

From deep learning algorithms that can read CT scans faster than humans to natural language processing (NLP) that can comb through unstructured data in electronic health records (EHRs), the applications for AI in healthcare seem endless.

But like any technology at the peak of its hype curve, artificial intelligence faces criticism from its skeptics alongside enthusiasm from die-hard evangelists.

Despite its potential to unlock new insights and streamline the way providers and patients interact with healthcare data, AI may bring considerable risks of privacy problems, ethics concerns, and medical errors.

Balancing the risks and rewards of AI in healthcare will require collaborative effort from technology developers, regulators, end-users, consumers – and maybe even philosophy majors.

The first step will be addressing the highly divisive discussion points commonly raised when considering the adoption of some of the most complex technologies the healthcare world has to offer.

MRS. JONES, THE ROBOT WILL SEE YOU NOW…WHETHER YOU LIKE IT OR NOT

The idea that artificial intelligence will replace human workers has been around since the very first automata appeared in ancient myth.

From Hephaestus’ forge workers to the Jewish legend of the Golem, humans have always been fascinated with the notion of imbuing inanimate objects with intelligence and spirit – and have equally feared these objects’ abilities to be better, stronger, faster, and smarter than their creators.

For the first time in history, machine learning is letting data scientists and robotic engineers approach the threshold of true autonomous intelligence in everything from self-driving cars to the eerily dog-like SpotMini by Boston Dynamics.

The technologies supporting these breakthrough capabilities are also finding a home in healthcare, and physicians are starting to be concerned that AI is about to evict them from their offices and clinics.

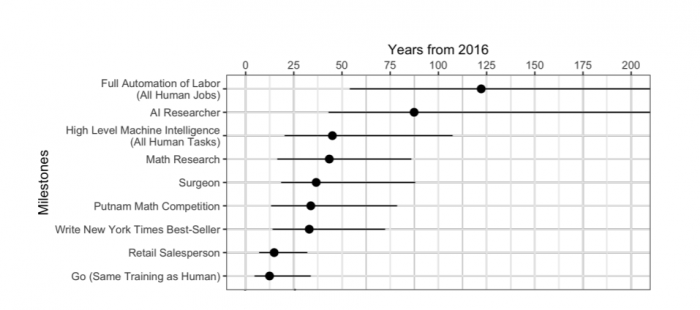

By 2053, surgical jobs could be the exclusive purview of AI tools, researchers from Oxford University and Yale University cautioned in a 2017 study.

Seventy-one percent of Americans surveyed by Gallup in early 2018 believe AI will eliminate more healthcare jobs than it creates.

Just under a quarter believe the healthcare industry will be among the first to see widespread handouts of pink slips due to the rise of machine learning tools.

Their fears may not be entirely unfounded.

In June of 2018, Babylon Health announced that an AI algorithm scored higher than humans on the written test on diagnostics used to certify physicians in the United Kingdom.

A year before that, a robot from a Chinese developer passed the Chinese national medical licensing exam with flying colors.

AI tools that consistently exceed human performance thresholds are constantly in the headlines, and the pace of innovation is only accelerating.

Source: Oxford University / Yale University

Radiologists and pathologists may be especially vulnerable, as many of the most impressive breakthroughs are happening around imaging analytics and diagnostics.

At the same time, however, one could argue that there simply aren’t enough radiologists and pathologists – or surgeons, or primary care providers, or intensivists – to begin with.

The US is facing a dangerous physician shortage, especially in rural regions, and the drought is even worse in developing countries around the world.

A little help from an AI colleague that doesn’t need that fifteenth cup of coffee during the graveyard shift might not be the worst idea for physicians struggling to meet the demands of high caseloads and complex patients, especially as the aging Baby Boomer generation starts to require significant investments in care.

AI may also help to alleviate the stresses of burnout that are driving physicians out of practice. The epidemic affects the majority of physicians, not to mention nurses and other care providers, who are likely to cut their hours or take early retirements rather than continue powering through paperwork that leaves them unfulfilled.

Automating some of the routine tasks that take up a physician’s time, such as EHR documentation, administrative reporting, or even triaging CT scans, can free up humans to focus on the complicated challenges of patients with rare or serious conditions.

The majority of AI experts believe that this blend of human experience and digital augmentation will be the natural settling point for AI in healthcare. Each type of intelligence will bring something to the table, and both will work together to improve the delivery of care.

For example, artificial intelligence is ideally equipped to handle challenges that humans are naturally not able to tackle, such as synthesizing gigabytes of raw data from multiple sources into a single, color-coded risk score for a hospitalized patient with sepsis.

But no one is expecting a robot to talk to that patient’s family about treatment options or comfort them if the disease claims the patient’s life. Yet.

EVEN IF AI DOESN’T REPLACE DOCTORS, IT WILL ALTER TRADITIONAL RELATIONSHIPS BEYOND RECOGNITION

It’s true that adding artificial intelligence to the mix will change the way patients interact with providers, providers interact with technology, and everyone interacts with data. And that isn’t always a good thing.

Physicians, nurses, and other clinicians care deeply about their relationships with patients, and prioritize face time above most other aspects of the job.

They are also convinced that health IT use is the number one obstacle getting in the way.

Electronic health records are consistently blamed for interfering with the patient-provider relationship, sucking time away from already-limited appointments and preventing clinicians from picking up on non-verbal cues by keeping their eyes locked on their keyboards instead of the person in front of them.

Providers have relentlessly hammered regulators and health IT developers for perceived shortcomings in usability and design, and many are not convinced that artificial intelligence will do that much to change their negative perceptions of point-of-care technology tools.

The majority of the major EHR vendors have taken the opposite point of view. Most have gone all-in on artificial intelligence as a transformative technology that will rewrite workflows, simplify interactions with complex data, and give providers back the time they need to do much more of what they love.

Clinicians who have been burned by big promises before may not be rushing to believe that AI will solve all their EHR problems, but clinicians aren’t the only ones experiencing some satisfaction shortfalls.

Patients are also a major part of the equation, and they do not believe that all their traditional relationships with the healthcare system are worth preserving just as they are.

Making payments, scheduling appointments, conducting follow-up visits, or even waiting for a nurse to answer the phone to triage a low-level complaint all create friction in the patient-provider relationship. For individuals with chronic diseases requiring regular follow-up for the long term, these pain points may occur on a monthly basis.

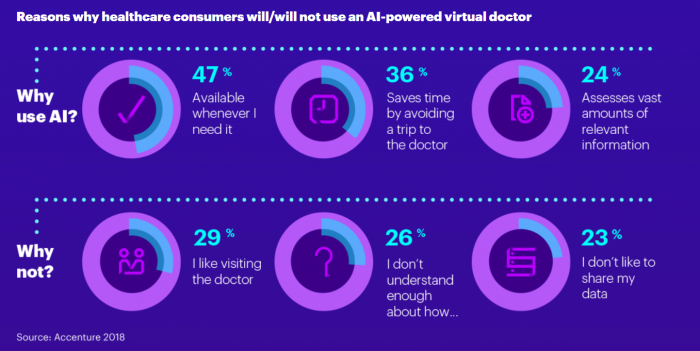

In a recent consumer survey conducted by Accenture, only 29 percent of patients said they wouldn’t use AI tools specifically because they prefer meeting with a doctor in person.

Sixty-one percent would use an artificially intelligent virtual assistant to handle financial transactions, schedule appointments, or explain their health insurance coverage options. And 57 percent would be interested in an AI health coach to help them manage wellness or chronic disease.

Source: Accenture

Somewhat fewer – around 50 percent – would be willing to trust an AI nurse or physician with diagnoses, treatment decisions, and other direct patient care tasks, but that is still a fairly high number of people interested in engaging with a novel technology for something as fundamental as their healthcare.

The increased comfort with AI technologies does not necessarily represent a decrease in the value patients place on a face-to-face with an empathetic, informed, and attentive physician.

But it does indicate a need for something to fill existing gaps in care access, expand the availability of information, and smooth over the rough edges of completing administrative tasks. Artificial intelligence is the most promising candidate to step into those roles.

That being said, voice-enabled virtual assistants, chatbots, and ambient computing devices are still in their infancy.

Natural language processing underpins many of these interactions. Anyone who has shouted into a cell phone trying to get an automated phone tree system to understand the word “representative” knows that the technology still has some maturing to do before it can accurately capture user queries – let alone return trustworthy and accurate medical information.

TRUST AND ACCURACY ARE ONLY (A BIG) PART OF THE PROBLEM – WHAT ABOUT THE PRIVACY AND SECURITY NIGHTMARE?

There’s an argument to be made that AI can’t be much worse than humans at the security game. After all, Alexa and Siri aren’t about to leave their laptops on the subway or tip paper medical records off the back of a truck.

But artificial intelligence presents a whole new set of challenges around data privacy and security – challenges that are compounded by the fact that most algorithms need access to massive datasets for training and validation.

Shuffling gigabytes of data between disparate systems is uncharted territory for most healthcare organizations, and stakeholders are no longer underestimating the financial and reputational perils of a high-profile data breach.

Most organizations are advised to keep their data assets closely guarded in highly secure, HIPAA-compliant systems. In light of an epidemic of ransomware and knock-out punches from cyberattacks of all kinds, CISOs have every right to be reluctant to lower their drawbridges and allow data to move freely into and out of their organizations.

Storing large datasets in a single location makes that repository a very attractive target for hackers.

Technologies like blockchain that may be able to keep personally identifiable information out of those data pools are promising, but are still new to the industry.

Consumers aren’t thrilled with the idea, either. In an April 2018 survey by SAS, only 35 percent of patients expressed even a little confidence that personal health data destined for AI algorithms was being stored securely.

However, consumers and experts alike acted exactly the same way when the idea of big data analytics first took hold in the healthcare industry, and attitudes about data sharing have evolved very rapidly.

In 2013, almost 90 percent of data scientists said patients should be very worried about the idea of healthcare organizations potentially bungling their privacy rights when conducting analytics of any kind.

Yet in 2018, patients are demanding the ability to swap EHR data through their cell phones and spending hundreds of dollars to send genetic material through the mail without reading – or maybe not caring about – the fine print stating who gets to use that information down the line.

Security and privacy will always be paramount, but this ongoing shift in perspective as stakeholders get more familiar with the challenges and opportunities of data sharing is vital for allowing AI to flourish in a health IT ecosystem where data is siloed and access to quality information is one of the industry’s biggest obstacles.

Developers and data scientists need clean, complete, accurate, and multifaceted data – not to mention metadata – to teach their AI algorithms how to identify red flags and return highly useful results.

Currently, they simply don’t have it. At the beginning of 2018, half of respondents to an Infosys survey said their data is not ready to support their AI ambitions.

However, new policies and approaches to data curation, sharing, security, and reuse are starting to make data more available to more researchers at disparate organizations, and healthcare executives are beginning to invest much more heavily in building the data pipelines required to turn their data stores into actionable assets.

More than 40 percent of CIOs participating in an IDG poll at the beginning of 2018 were planning to bulk up their infrastructure and data science investments to enable artificial intelligence and machine learning.

Coupled with tone-setting policy from organizations like PCORI, Harvard University, and the ONC, as well as emerging technologies like blockchain, the healthcare industry may be starting to shape a workable approach to securing and sharing data across a brand new research ecosystem.

DATA USAGE POLICIES ARE GREAT, BUT WHAT ABOUT THE LARGER QUESTIONS OF ETHICS, RESPONSIBILITY, AND OVERSIGHT?

The thorniest issues in the debate about artificial intelligence are the philosophical ones. In addition to the theoretical quandaries about who gets the ultimate blame for a life-threatening mistake, there are tangible legal and financial consequences when the word “malpractice” enters the equation.

Artificial intelligence algorithms are complex by their very nature. The more advanced the technology gets, the harder it will be for the average human to dissect the decision-making processes of these tools.

Organizations are already struggling with the issue of trust when it comes to heeding recommendations flashing on a computer screen, and providers are caught in the difficult situation of having access to large volumes of data but not feeling confident in the tools that are available to help them parse through it.

While artificial intelligence is presumed to be completely free of the social and experience-based biases entrenched in the human brain, these algorithms are perhaps even more susceptible than people to making assumptions if the data they are trained on is skewed toward one perspective or another.

There are currently few reliable mechanisms to flag such biases. “Black box” artificial intelligence tools that give little rationale for their decisions only complicate the problem – and make it more difficult to assign responsibility to an individual when something goes awry.

When providers are legally responsible for any negative consequences that could have been identified from data they have in their possession, they need to be absolutely certain that the algorithms they use are presenting all of the relevant information in a way that enables optimal decision-making.

They aren’t yet convinced that AI has their back in the courtroom or the clinic. More than a third of participants in a 2017 HIMSS Analytics poll said that they are hesitant to adopt AI due to its immaturity.

Among early adopters of AI, half said that the technology had not been developed sufficiently to support reliable use across their organizations.

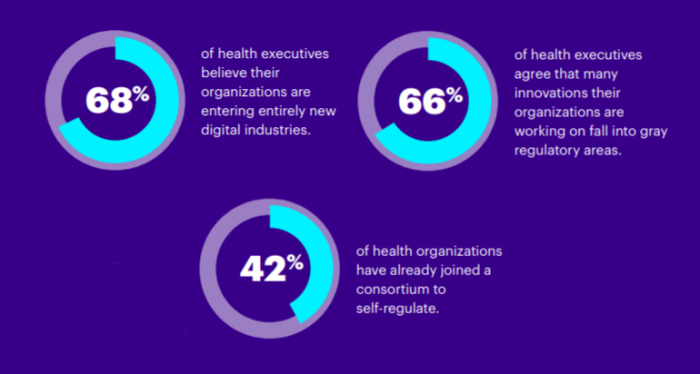

Source: Accenture

The good thing is that organizations recognize that they’re staring down a major ethical and legal conundrum. In a 2017 Accenture poll, two-thirds of healthcare executives acknowledged that many of their AI innovations fall into “regulatory gray areas.”

Forty-two percent had already taken steps to self-regulate, such as joining an industry consortium dedicated to navigating the emerging landscape.

Organizations like the FDA, the Clinical Decision Support (CDS) Coalition, and even Harvard University are offering guidance on how to move forward with AI in a safe, ethical, and sustainable manner that supports better patient care while avoiding doomsday scenarios of AI run amok.

And one can only hope that the looming giants of the broader technology universe, such as Amazon, Google, Apple, and Microsoft, will use their extensive powers for good as they extend their reach into the healthcare industry.

Ensuring that artificial intelligence develops ethically, safely, and meaningfully in healthcare will be the responsibility of all stakeholders: providers, patients, payers, developers, and everyone in between.

There are more questions to answer than anyone can even fathom. But unanswered questions are the reason to keep exploring – not to hang back.

The healthcare ecosystem has to start somewhere, and “from scratch” is as good a place as any.

Defining the industry’s approaches to AI is an unfathomable responsibility, but also a golden opportunity to avoid some of the mistakes of the past and chart a better path for the future.

It’s an exciting, confusing, frustrating, optimistic time to be in healthcare, and the continuing maturity of artificial intelligence will only add to the mixed emotions of these ongoing debates. There may not be any clear answers to these fundamental challenges at the moment, but humans still have the opportunity to take the reins, make the hard choices, and shape the future of patient care.