By Jessica Lipschitz and John Torous

We can’t seem to figure out how to make a good placebo for controlled studies.

The phrase “there’s an app for that” has gone from stunning claim to cliché to fact of life. We use apps for everything from finding romantic partners to safeguarding our health to getting an eyeglasses prescription. But when it comes to health-related apps, whether they claim to treat depression or diabetes, determining what is effective is often difficult. That’s troublesome, because therapeutic apps have enormous potential to reduce the cost of health care, if only we can determine whether they work. As with everything else in health care, determining the impact of an app requires rigorous, well-planned studies. And that brings us to one of the field’s biggest challenges: It’s really hard to come up with a placebo for an app.

The randomized controlled trial is the long-standing scientific method for isolating the impact of a new medical treatment. These trials involve randomly assigning participants to either the treatment group, where they receive the experimental intervention, or a control group, where they receive what is called a control condition. Control conditions can be established treatments, no treatment at all (sometimes called the wait list), or a placebo. Often, a placebo is a sugar pill that is matched in size and color to the experimental medication but does not deliver any biologically active agents. After the intervention, the outcomes for the two groups are compared to see whether the thing being studied made a difference.

Placebo control groups are the gold standard because participants don’t know whether they are in the treatment group or the control group. That means participants in the control group have the same expectations for improvement and side effects as those in the treatment group. In fact, these expectations themselves often have a positive impact on the outcome of interest, which is known as the placebo effect. This study design also controls for the fact that some people in both groups may naturally improve on their own, without intervention.

That’s why we need a sugar-pill equivalent for digital health. Without placebos, we can’t know the actual impact of a treatment because we have not controlled for the known impact of user expectations on outcomes. This is well illustrated in a recent review of 18 randomized controlled trials evaluating the effectiveness of smartphone apps for depression. In studies where a smartphone app was compared with a wait-list control condition, the app appeared moderately effective in reducing depressive symptoms. But when apps were compared with active control conditions, like journaling or using other apps that researchers did not expect would work, the comparative effectiveness of the smartphone apps being studied fell 61 percent. This difference is consistent with what would be expected based on the placebo effect.

A recent study of the popular meditation app Headspace demonstrates the complexity of the digital placebo. In this study, a sham meditation app used the Headspace interface and offered users breathing exercises that were referred to as meditation and were guided by Headspace co-founder Andy Puddicombe. But it didn’t include discussion about the core tenets of mindfulness or offer varied progressive forms of guided mindfulness—there was just one exercise.

The result? The researchers found that mindfulness improved in participants in both groups. There was no added benefit from introducing the concept of mindfulness practice or from offering progressive and varied mindfulness exercises, instead of just one.

In some senses, this was an ideal placebo because the apps were so similar. But those similarities also mean that the placebo may have offered many of the likely “active ingredients” of the fuller Headspace app. Rather than testing the Headspace app itself, then, the study suggested that mindfulness instruction and content variation probably do not drive the impact that may be observed in a mindfulness app like Headspace. The study also adds to other research without placebo controls indicating that Headspace may increase mindfulness and decrease depression. But, again, we cannot draw clear conclusions on effectiveness from this study.

The Headspace study highlights how it can be particularly challenging to identify a sugar-pill equivalent in digital health, but designing a placebo is hard throughout the field of behavioral health, where, as you can imagine, it is difficult to deliver “sham” therapy. This means that nonplacebo control groups (e.g., wait list or treatment-as-usual) that do not control for participant expectations are common. It also means that we often compare our experimental treatments to options that we know contain active components of the test intervention. For example, we may compare eight sessions of cognitive behavioral therapy to eight sessions of supportive therapy. Both conditions here provide eight hours of validation and support from a licensed clinician, but in contrast to supportive therapy, which is largely unstructured, cognitive behavioral therapy focuses on specific exercises aimed at changing thought and behavior patterns that may be maintaining the psychological problem. The issue is that the supportive therapy delivers one of the active components of the cognitive behavioral therapy (the therapist-patient connection), so this design will make the impact of cognitive behavioral therapy seem smaller than it is.

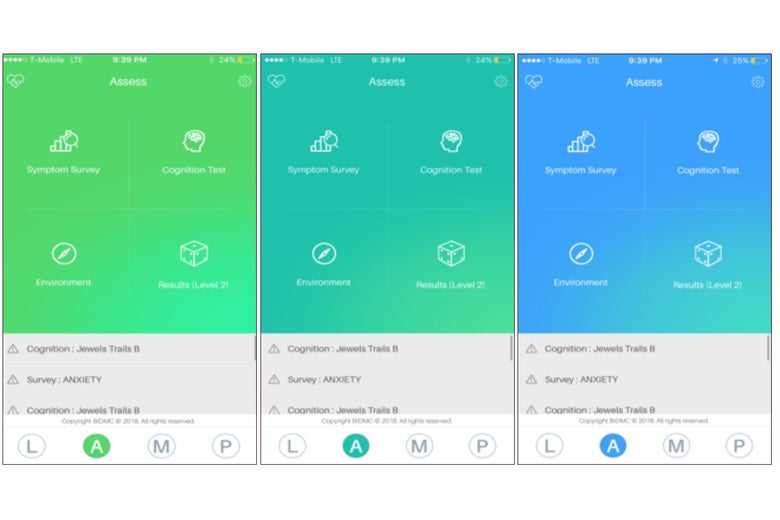

To make matters slightly more complicated, seemingly little things can impact the placebo effect. For example, in medication trials, it is well established that the color of a sugar pill makes a difference. Something similar could happen with digital health apps. For example, the accompanying images are from a research app being studied by our team where background color can be changed with the click of a button. The hypothesis being tested: Background screen color impacts mood symptoms reported to the app. Part of running high-quality digital health randomized controlled trials will involve understanding and accounting for the variables, even the unexpected or seemingly inconsequential ones, that impact the placebo effect.

So as a consumer, which health apps should you trust and use? More high-quality randomized controlled trials are coming, and the Headspace study was a step in the right direction. In the meantime, we can look at the existing evidence and conclude that people using many apps, Headspace included, seem to achieve improvement. Of course, for serious health concerns, diagnosis by a medical professional and strong therapeutic relationships are still the gold standard, but apps are increasingly a worthwhile supplement. So, while the medical science behind apps evolves, the best evidence may still be how useful you find the app to be for yourself.